“Transformations often don’t feel that disruptive early on in an exponential curve then suddenly they feel very disruptive.”

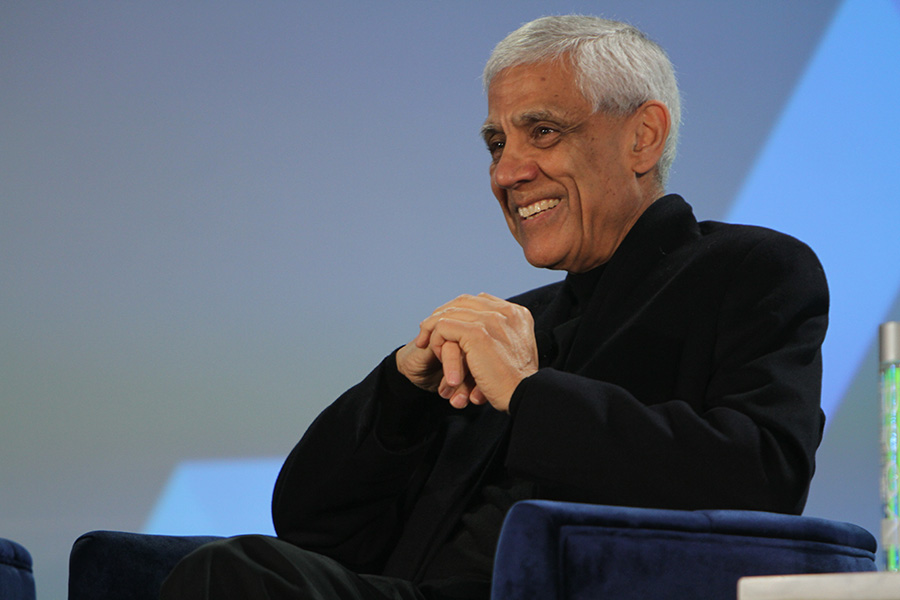

Vinod Khosla shared that wisdom with attendees of the Health Evolution Executive Briefing Artificial Intelligence and Machine Learning. Khosla, founder of Khosla Ventures and a famed entrepreneur who co-founded Sun Microsystems, was joined by Steve Klasko, MD, President & CEO of Thomas Jefferson University and Jefferson Health. The two discussed the future of AI development, the potential impact of COVID-19 on this technology and more.

Khosla was talking about COVID-19 when he made that remark but quickly turned the thought into a discussion of AI development. He said disruptions in AI will come in increments and that 25 years from now, everything doctors rely on for judgement will be done by machines. AI will not, however, replace the human aspect of providing care. Klasko agreed.

“Any doctor that can be replaced by a computer should be. When you think about it, we still accept medical students based on science, GPA, MCAT scores, and organic chemistry grades and somehow, we’re amazed doctors aren’t more empathetic, communicative and creative,” Klasko said.

Khosla sets a timeline

In three years, Khosla said, we’ll see the beginnings of what Erik Brynjolfsson described in 2017: “Artificial intelligence won’t replace managers but managers who use AI will replace managers that don’t.” Moreover, he said there will be disparities between the health systems and organizations that use AI and those that do not.

Incumbents in health care could be in trouble since those are the organizations that usually get disrupted by technological transformation. “Nobody could design electric cars like Tesla because they broke tradition,” Khosla said.

Retail is another example where this is true, although Klasko said the big box chains, like Walmart and Target, may provide a source of inspiration for health care organizations. “They’re competing with Amazon on the e-side, but they also have stores. We’re always going to have to take care of patients with pancreatic cancer in a hospital,” Klasko said.

By the end of the 2020s, Khosla said, AI will have to translate different kinds of patient data into explainable information that a doctor can understand. The AI will learn from different doctors and experts, he said, to create individual care plans for patients.

“Good AI systems will take the expertise of all experts, aggregate them into a consistent treatment paradigm, and the data will be much more detailed,” he predicted.

The future of pandemic disease control and public health

In the future, Khosla said, different types of data could help predict who is going to get a viral disease during a pandemic. Research is being done on this front at Stanford Medical, he noted, although it’s still in the early stages. For instance, he said doctors could use heart rate variability (HRV) data, combined with other information, to detect oncoming illnesses. The big issue will be distinguishing the coronavirus from the flu and other illnesses.

This isn’t just true for viral infections during a pandemic, but for all diseases, Khosla said. AI systems beyond the 2020s will incorporate genomic and other data to the point where they will predict diseases in patients years in advance. He said work is already being done in this regard, especially in oncology.

“The idea of symptom-based medicine will disappear in 15 years. You shouldn’t be reacting to symptoms. Disease is complex, it can be detected early. Symptom-based medicine is too late and should mostly disappear. When you break a bone, you’re still breaking a bone,” he said.

In other matters of public health, Khosla said that AI will help reduce health inequities and fix biases in care. In fact, he boldly predicted that “health equity will only come from AI” at a lower cost.

“Bias in AI comes from bias in humans. That bias exists because humans are biased against billing or they’re biased against women, when she says she has pain, they dismiss it more easily than if a man says it. Or they’re biased by ethnicity or something else. AI has exposed the bias of humans and made it fixable. Bias exists in our systems, but it’s explicit instead of being complicit and bias being explicit makes it easier to be fixed,” he said.